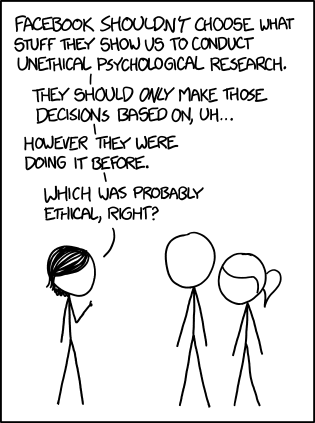

As the xkcd comic ‘Research Ethics’ sums up, there is a lot of confusion (and ethical grey area) surrounding companies like Facebook and the unprecedented ways they control the flow of information. You may have come across reports about Facebook’s “emotional contagion” experiment, along with some anger and confusion over how the research was conducted and published. In this post I will link to some explanations of what happened and some hypotheses about why it received the reaction it did. Why discuss a site most MSK staff can’t see from our desks? Because this news highlights an interesting change in how we get information (as well as how we produce it), and I think it’s worth considering.

On Culture Digitally, Tarleton Gillespie weighs in with a post called “Facebook’s Algorithm – Why Our Assumptions Are Wrong, And Our Concerns Are Right.” After linking to media coverage of the case (and disclosing his relationship to some of the researchers involved) and noting that the timing of the outcry has a lot to do with other recent news about surveillance, he points out that as a society we are only beginning to grapple with the growing amount of algorithmically controlled information in our lives. Gillespie’s post is measured and makes some good points about the ways this situation is new.

Danah Boyd offers a more openly critical post, “What does the Facebook experiment teach us? There is Growing Anxiety About Data Manipulation” (this post has been criticized for vilifying Facebook and for failing to mention Boyd’s own work with data for other companies). In it she asks why we are comfortable with corporations like Facebook using algorithms to manipulate information in their everyday operations, yet complain when they publish the results as research that doesn’t meet the standard expectation that research on people requires consent. She suggests corporate ethics boards made up of scholars and users as well as staff.

An older post called “Corrupt Personalization” by Christian Sandvig digs into how the algorithms of Facebook and other companies work (or seemed to work in 2010) and tries to answer the question of why people should care (via Berkman Center Fellow @smwat). Finally, Barbara Fister’s Library Babel Fish blog on Inside Higher Education has a post that touches on these issues and highlights open, independent solutions (via @mnyc).

Ultimately, there may be no clear right or wrong here, but understanding the information landscape a little better certainly isn’t a bad thing.