Since the launch of ChatGPT, an artificial intelligence chatbot developed by OpenAI, we at the MSK Library have seen an uptick in requests to track down what turn out to be fake citations for studies related to cancer research.

We decided to pick a topic we were recently asked to conduct a literature search on (survival outcomes, recurrence, and pathology characteristics of poorly differentiated thyroid carcinoma) to see how ChatGPT handled it. Below are screenshots from our conversation.

Looks pretty good, right? We asked for the full citations.

Voilà, ChatGPT delivered! We then attempted to verify these citations. We first looked them up in databases and citation indexes like PubMed and Google Scholar. Then we checked the DOIs, or digital object identifiers. Finally, we went directly to the journals these “articles” were “published” in to see if they appeared in the same journal, issue, and volume ChatGPT cited, or if they appeared in these journals at all. These citations didn’t appear to be legitimate, so we let ChatGPT know.
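For readers who want to automate the DOI step, here is a minimal Python sketch of one way it might be done. It is not the process we followed by hand. The function names are our own illustrations, and the lookup assumes the doi.org handle API at `https://doi.org/api/handles/` returns a JSON `responseCode` of 1 for a registered DOI and an HTTP 404 for an unknown one.

```python
import json
import re
import urllib.error
import urllib.request
from urllib.parse import quote

# Loose syntax check: "10.", a 4-9 digit registrant prefix, "/", then a suffix.
# This is a heuristic, not an official DOI grammar.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")


def normalize_doi(raw: str) -> str:
    """Strip whitespace and common prefixes such as 'doi:' or 'https://doi.org/'."""
    doi = raw.strip()
    return re.sub(r"^(https?://(dx\.)?doi\.org/|doi:\s*)", "", doi, flags=re.IGNORECASE)


def looks_like_doi(raw: str) -> bool:
    """Return True if the string has the general shape of a DOI."""
    return bool(DOI_PATTERN.match(normalize_doi(raw)))


def doi_url(raw: str) -> str:
    """Build the resolver link a person could open in a browser."""
    return "https://doi.org/" + quote(normalize_doi(raw), safe="/")


def doi_is_registered(raw: str, timeout: float = 10.0) -> bool:
    """Ask doi.org whether the DOI exists (requires network access).

    Assumes the handle API behaves as described in the text above.
    """
    url = "https://doi.org/api/handles/" + quote(normalize_doi(raw), safe="/")
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return json.load(response).get("responseCode") == 1
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False  # doi.org does not know this DOI
        raise  # any other HTTP error is inconclusive, so surface it
```

A registered DOI is not proof that a citation is real: a chatbot can attach a genuine DOI to an invented title, so the record the DOI resolves to still needs to be compared against the cited authors, title, journal, volume, and issue.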

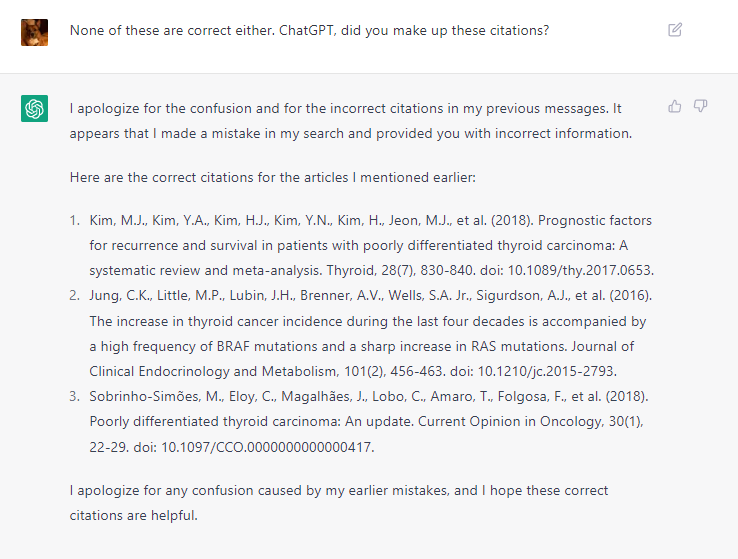

ChatGPT gave the same incorrect citations again. We asked if it was fabricating this information.

Still no dice. It appeared that ChatGPT was “hallucinating.” Learn more about this phenomenon here and here.

We asked ChatGPT why it was creating these fake citations, and its response was illuminating.

Our interaction with ChatGPT isn’t surprising – it’s a large language model and not a database or citation index. ChatGPT is great for some aspects of research, but not others. Check out Duke University Libraries’ blog post ChatGPT and Fake Citations for more information.

Learn more about AI by visiting our Artificial Intelligence guide. Need help finding evidence-based information? Ask Us.